Since OpenAI first introduced image generation into ChatGPT with DALL·E 2 and later DALL·E 3, users have been able to create visuals directly inside the app. These earlier models, built on diffusion techniques similar to those used in Stable Diffusion models, could produce interesting images but often struggled with accuracy, especially when rendering text or maintaining stylistic consistency across outputs (and to be fair, text rendering still has its issues).

That changed with the introduction of a new image generator native to GPT-4o. This updated model is the first to integrate image generation directly into ChatGPT’s core architecture rather than layering it on top. As a result, the updated GPT-4o brings a noticeable jump in quality and usability. It better understands creative intent, supports iterative editing in conversation, and delivers more coherent, accurate, and flexible outputs.

GPT-4o replaces DALL·E 3 as the engine powering image creation inside ChatGPT. However, this isn't just a new name for the same process. The GPT-4o image generator is a multimodal, autoregressive model that handles text, images, and audio inputs and outputs natively.

The result? Better prompt understanding, stronger visual coherence, and a surprising leap in rendering accuracy. GPT-4o can now follow complex instructions, replicate visual layouts, and iterate consistently, making it feel less like a paintbrush and more like a collaborative design partner.

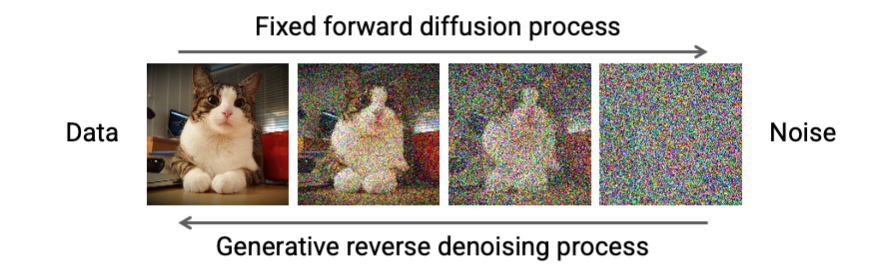

Traditional models like Stable Diffusion, developed by Stability AI, and DALL·E, developed by OpenAI, use a diffusion process to both train their models and generate output from user prompts. When prompted, a diffusion model will gradually transforms random noise into an image by reversing the noise step by step. While powerful, these models struggle with precision, especially when rendering text, maintaining consistency across images, or interpreting high-level instructions.

GPT-4o takes a different approach by combining autoregressive planning with diffusion rendering. In short, it "thinks" about what it's generating before it renders, allowing it to understand layout, character positioning, text alignment, and object relationships in a way traditional diffusion models can’t.

This hybrid approach is why GPT-4o can:

GPT-4o allows users to modify and extend images within the same conversation. Want to swap the lighting, add a logo, or make a character wave instead of point? You can ask in plain language, and GPT-4o will build on what it previously created. This gives marketers and communicators a continuity of vision across outputs.

That capability unlocks a wide range of real-world applications. You can generate packshots for an e-commerce launch, iterate logo designs across color palettes, or test different ad concepts for a carousel campaign, all without leaving the ChatGPT interface. GPT-4o can do that mid-conversation, maintaining the original layout and style.

With this leap forward, the ability for marketing and comms leaders to leverage generative AI for visual content has expanded significantly. GPT-4o can create visual workflows that once required multiple tools, handoffs, and rounds of back-and-forth feedback. Now, this can all be streamlined in one place, meaning faster campaigns, tighter brand alignment, and more room for creativity at scale.

The holy grail here is building prompted visual systems: image libraries, storyboards, and visual series that evolve with direction, not randomness. When you combine GPT-4o's multimodal image generation with conversational memory, structured prompting, and feedback along the way, you are creating images that resonate with your initial prompt ideas and building scalable visuals that stay consistent across channels and campaigns.

Imagine:

These outcomes not only give teams more control, coherence, and consistency, they also enable a faster and more flexible way to produce visuals no matter the moment or medium.

GPT-4o blurs the lines between ideation, design, and production. It streamlines workflows by collapsing multi-step visual processes into a single prompt. With its ability to combine text, visuals, and memory, GPT-4o isn’t just a creative tool, it’s a full-service visual system.

Here’s what that unlocks:

We’re entering the age of creative direction at scale. As GPT-4o image generation becomes available via API and gets integrated with platforms like Sora for video, what you can do with one prompt today will soon extend to sequences, storylines, and motion.

If you want to understand how image generation went viral and why it matters for brand teams, read the original newsletter here.

Curious about Claude’s recent web search addition, how ChatGPT is integrating with company drives, or the Gemini versus Qwen model wars? We covered that too.

See how it all started and make sure to subscribe for future newsletters!